Migrating GitLab from Bare-Metal to Docker (with reverse proxy and utilizing acme.sh)

I am using GitLab Enterprise Edition (Free Tier) for my coding projects. Recently, some of my rented servers became more expensive, and I wanted to check what I could consolidate to save a few bucks. While researching, I noticed that GitLab can easily be run as a Docker container.

Previously, my GitLab was hosted on a vServer used solely for collaboration (GitLab, Nextcloud and a few Docker containers). The reason was that, on the one hand, GitLab is a fairly massive bundle of software. On the other hand, it also has a fairly large resource footprint.

Since then, GitLab has become much better regarding resource usage, and the move to Docker makes it much easier to run GitLab on a shared system (one that runs other services as well), because there are no compatibility issues between different software configurations on the same system.

Downsides

Docker itself adds a small layer of complexity. You cannot simply run gitlab-ctl directly on the host, and you have to maintain a few configuration options in two different places (docker-compose.yml and gitlab.rb). In the end, these are minor inconveniences. Docker also adds a little overhead, of course.

That being said, there are no real downsides if you have used Docker before. There is one more thing to remember though: a port on the host can only be bound once, so GitLab cannot share ports with other services.

The upside: you are adding a layer of security. If someone breaks into your GitLab instance, the rest of the server is still reasonably well isolated.

The plan: GitLab behind a reverse proxy

There are multiple ways to run GitLab in a Docker container on any Docker host. You can give GitLab its own IP address, and it will behave like a standalone server. You can also give GitLab different ports (not 22, 80, 443), or simply keep those ports free on the host.

In our case, I am configuring the container on a server that also hosts other things, including web services. Ports 22, 80 and 443 are already in use. The plan is to give GitLab a different SSH port, which is not an issue, as only I will be using it for push/pull. The web frontend, however, must run on the default ports. To achieve this, we will set up the already running Apache web server to reverse-proxy the GitLab hostnames to the Docker container.

This guide will also work without a reverse proxy. You will only have to adapt a few configurations.

Preparation: Updating GitLab

This guide assumes that you are already running a server with the latest Docker Engine (including Docker Compose) installed and Apache2 as a web server, including mod_proxy. It will also work with nginx, using adapted configurations. acme.sh will be used to obtain a certificate. As always, the commands are based on a Debian server. Other distros might differ slightly.

Before starting the migration, we should make sure that we are running the most recent version of GitLab. If not, update it! The source and destination GitLab must run the exact same version, and the newer the better, because of fixed issues.
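To pick the matching image, one option is to read the installed package version on the old server and derive the image tag from it. This is only a minimal sketch: it assumes a Debian-based source server where GitLab was installed from the gitlab-ee package, and that the image tag mirrors the package version (as with 18.1.1-ee.0 in this guide). The helper name gitlab_image_for is made up for illustration.

```shell
# Hypothetical helper: build an image reference from edition and package version.
gitlab_image_for() {
  edition="$1"   # gitlab-ee or gitlab-ce
  version="$2"   # for example 18.1.1-ee.0
  printf 'gitlab/%s:%s\n' "$edition" "$version"
}

# On the old server (assumption: Debian package named gitlab-ee):
#   version="$(dpkg-query -W -f='${Version}' gitlab-ee)"
#   gitlab_image_for gitlab-ee "$version"
```

The printed reference can then be compared with the image line in docker-compose.yml before the first start.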

Step 1: Configuring the docker container

On your new server, create a folder that holds your docker-compose.yml:

mkdir -p /opt/dockerapps/gitlab
cd /opt/dockerapps/gitlab
nano docker-compose.yml # this will open the nano editor to configure the compose file

Next, copy this content into the nano editor and set up the templated fields accordingly. In particular, check the version (18.1.1-ee in this example; it might be -ce if you're migrating a Community Edition GitLab, and likely a different version).

Also check the ports. They must not be in use by anything else. In my case, I am using 9980, 9943 and 9922. GitLab will run under the FQDN git.example.com
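Whether the chosen ports are still free can be checked before the first start. The following is a sketch under the assumption that ss from iproute2 is available on the host; port_listed is a hypothetical helper that only parses ss output passed on stdin.

```shell
# Hypothetical helper: succeed if the given port shows up as a local listening
# port in `ss -Htln` style output read from stdin (column 4 is address:port).
port_listed() {
  awk -v port="$1" '$4 ~ (":" port "$") { found = 1 } END { exit !found }'
}

# Usage on the Docker host (assumes iproute2's ss is installed):
#   for p in 9980 9943 9922; do
#     if ss -Htln | port_listed "$p"; then echo "port $p is already in use"; fi
#   done
```

If nothing is printed for a port, no TCP listener was found on it at that moment.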

Also remember to update the volume bind mount paths. I am a fan of absolute paths, don’t ask me why.

services:
  gitlab:
    image: gitlab/gitlab-ee:18.1.1-ee.0
    container_name: gitlab
    restart: always
    hostname: 'git.example.com'
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        # Add any other gitlab.rb configuration that is required at the initialization phase (hostname, ports, ...) here, each on its own line. The gitlab.rb file will take over after initialization, so configure it, too!
        external_url 'https://git.example.com'
        gitlab_rails['gitlab_shell_ssh_port'] = 9922
        nginx['ssl_certificate'] = "/etc/gitlab/ssl/gitlab-ssl.pem"
        nginx['ssl_certificate_key'] = "/etc/gitlab/ssl/gitlab-ssl.key"
        letsencrypt['enable'] = false
    ports:
      - '127.0.0.1:9980:80'
      - '127.0.0.1:9943:443'
      - '9922:22'
    volumes:
      - '/opt/dockerapps/gitlab/config:/etc/gitlab'
      - '/opt/dockerapps/gitlab/logs:/var/log/gitlab'
      - '/opt/dockerapps/gitlab/data:/var/opt/gitlab'
      - '/opt/dockerapps/gitlab/ssl.pem:/etc/gitlab/ssl/gitlab-ssl.pem:ro'
      - '/opt/dockerapps/gitlab/ssl.key:/etc/gitlab/ssl/gitlab-ssl.key:ro'
    shm_size: '256m'
    healthcheck:
      disable: true

Note: We're not setting any port in "hostname" or "external_url" because we are using a reverse proxy. If you choose the non-proxy custom port approach, add the port to "external_url", like external_url 'https://git.example.com:9943'.
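One caveat that may be worth checking, based on how Docker treats short-syntax bind mounts: if the host path does not exist when the container is created, Docker creates it as a directory. Since ssl.pem and ssl.key are only created in Step 2, they could end up as empty directories after the first start. The sketch below simply reports what is at those paths; mount_source_kind is a hypothetical helper name.

```shell
# Hypothetical helper: report whether a bind-mount source is a regular file,
# a directory, or missing.
mount_source_kind() {
  if [ -f "$1" ]; then
    echo file
  elif [ -d "$1" ]; then
    echo directory
  else
    echo missing
  fi
}

# Paths taken from the compose file above.
for f in /opt/dockerapps/gitlab/ssl.pem /opt/dockerapps/gitlab/ssl.key; do
  printf '%s: %s\n' "$f" "$(mount_source_kind "$f")"
done
```

If either path shows up as a directory, removing it while the container is stopped and creating the dummy certificate from Step 2 should give the container real files to mount.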

Save the file using Ctrl+X, confirming the save and spin GitLab up for the first time:

docker compose up

The startup will take a few minutes, depending on your server's resources and power.

When startup is done, let it settle for a few minutes and stop it again by pressing Ctrl+C

You should now see the three folders "config", "logs" and "data" in your folder:

root@hostname:/opt/dockerapps/gitlab# ls
config data docker-compose.yml logs

Finally, we only have to remove the Redis cache and the secrets file:

rm /opt/dockerapps/gitlab/data/redis/dump.rdb
rm /opt/dockerapps/gitlab/config/gitlab-secrets.json

Step 2: Configuring the reverse proxy

This step can be partially skipped if you are not using a reverse proxy. The tutorial assumes Apache2.

For this step, your DNS entries should already point to the new IP. Also you can skip the additional hostnames, if you are not using the features – or add more.

First, we create a dummy folder:

mkdir -p /var/www/proxied

This folder should not contain any files so far, but it must exist for the configuration to work. Now we will configure Apache. Create a config file in sites-available:

nano /etc/apache2/sites-available/gitlab-proxy.conf

Copy this content into the file and save it, again adapting the hostnames:

<VirtualHost *:80>
    #Adapt the hostname
    ServerName git.example.com
    DocumentRoot "/var/www/proxied"
    Redirect permanent / https://git.example.com/
    <Directory "/var/www/proxied">
        Require all granted
    </Directory>
</VirtualHost>

<VirtualHost *:443>
    #Adapt the hostname
    ServerName git.example.com
    #Adapt the wildcard hostname or add explicit hostnames like registry, ... Remove if unneeded.
    ServerAlias *.git.example.com

    #Create a quite secure SSL configuration. Adapt to your needs or leave as it is.
    SSLEngine on
    SSLProtocol all -SSLv2 -SSLv3 -TLSv1 -TLSv1.1
    SSLCipherSuite TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256:TLS_AES_128_GCM_SHA256:EECDH+AESGCM:EDH+AESGCM
    SSLCertificateFile /opt/dockerapps/gitlab/ssl.pem
    SSLCertificateKeyFile /opt/dockerapps/gitlab/ssl.key

    HostnameLookups Off
    UseCanonicalName Off
    ServerSignature Off

    ProxyRequests Off
    ProxyPreserveHost On
    ProxyTimeout 900

    #We enable SSLProxy but we don't check the certificate on backend
    #because we are using localhost and the public certificate of the real domain.
    SSLProxyEngine On
    SSLProxyCheckPeerCN Off
    SSLProxyCheckPeerName Off

    #Do NOT proxy ACME - We handle this locally
    ProxyPass /.well-known !
    #Proxy anything else - Adapt the port 9943 here to your setup
    ProxyPass / https://127.0.0.1:9943/ timeout=900 Keepalive=On

    DocumentRoot "/var/www/proxied"
    <Directory "/var/www/proxied">
        Require all granted
    </Directory>
</VirtualHost>

We need to create a temporary dummy certificate to allow Apache to serve the site. There are other ways, but this is the easiest one to describe here:

openssl req -new -newkey rsa:2048 -days 7 -nodes -x509 \
  -subj "/C=XX/ST=Dummy/L=Dummy/O=Dummy/CN=git.example.com" \
  -keyout /opt/dockerapps/gitlab/ssl.key -out /opt/dockerapps/gitlab/ssl.pem

Afterwards, reload the web server:

service apache2 reload

You should now be able to browse to https://git.example.com and receive a 502 error page after confirming a certificate warning. Verify this before proceeding!
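If the 502 page does not show up, one possible cause is that the new site or the required modules are not enabled yet. The following is a sketch assuming Debian's a2enmod and a2ensite helpers and the file name gitlab-proxy.conf from above. The run wrapper and the DRY_RUN variable are hypothetical additions that allow printing the commands before executing them.

```shell
# Hypothetical wrapper: print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Enable the modules used by the vhost, enable the site, validate, reload.
enable_gitlab_proxy() {
  run a2enmod ssl proxy proxy_http &&
  run a2ensite gitlab-proxy &&
  run apache2ctl configtest &&
  run service apache2 reload
}

# Preview first, then run for real (as root):
#   DRY_RUN=1 enable_gitlab_proxy
#   enable_gitlab_proxy
```

Running the config test before the reload means a typo in the vhost file is reported without taking the other sites down.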

The next step is requesting a certificate from Let's Encrypt, ZeroSSL or any other ACME CA using acme.sh. Assuming this tool is already installed and configured on your system, adapt and run this command. Make sure you list all relevant subdomains, and that all of these domains already point to the new server and are configured in Apache:

acme.sh --issue -d git.example.com -d registry.git.example.com --webroot /var/www/proxied/

When the certificate has been issued successfully, you can instruct acme.sh to install it into the desired location and automatically reload the services:

acme.sh --install-cert -d git.example.com --key-file /opt/dockerapps/gitlab/ssl.key \
  --fullchain-file /opt/dockerapps/gitlab/ssl.pem \
  --reloadcmd "service apache2 reload && cd /opt/dockerapps/gitlab/ && docker compose exec gitlab gitlab-ctl restart nginx"

You will now get an error at the reload stage because the Docker container is not running. This is expected.
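Because the reload error can hide whether the files were actually written, it may help to look at the installed certificate directly. A small sketch using the openssl command line tool; cert_summary is a hypothetical helper name.

```shell
# Hypothetical helper: print subject, issuer and expiry date of the first
# certificate in a PEM file.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -issuer -enddate
}

# Usage:
#   cert_summary /opt/dockerapps/gitlab/ssl.pem
```

After a successful install, the issuer should name the ACME CA rather than the "Dummy" organization of the temporary certificate.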

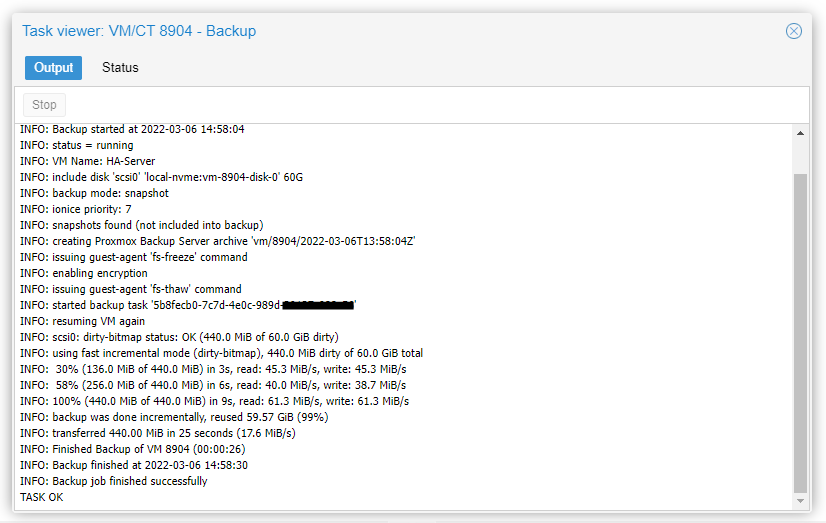

Step 3: Backing up and shutting down the old server

From this step on, your old GitLab instance will be down (if it isn't already because of the IP change), and the process will take some time, depending on the size of your instance.

Create a snapshot or full backup before proceeding.

On the source server, log in to the GitLab Admin panel and disable periodic background jobs:

On the left sidebar, at the bottom, select Admin. Then, on the left sidebar, select Monitoring > Background jobs. Under the Sidekiq dashboard, select the Cron tab and then Disable All.

Next, wait for Sidekiq jobs to finish:

Under the already open Sidekiq dashboard, select Queues and then Live Poll. Wait for Busy and Enqueued to drop to 0. These queues contain work that has been submitted by your users; shutting down before these jobs complete may cause the work to be lost. Make note of the numbers shown in the Sidekiq dashboard for post-migration verification.

You can probably skip these steps if you are confident that nothing is pending.

Next, save the Redis cache to disk and shut down most GitLab services by running the following command:

sudo /opt/gitlab/embedded/bin/redis-cli -s /var/opt/gitlab/redis/redis.socket save && \
sudo gitlab-ctl stop && sudo gitlab-ctl start postgresql && sudo gitlab-ctl start gitaly

GitLab will now run in a very minimal configuration, only ready to create a backup. To do this, execute

sudo gitlab-backup create

This will take some time. When it is done, the backup can be found in GitLab's backup folder. By default, this is /var/opt/gitlab/backups
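The restore in Step 5 expects the backup ID, which is the archive name without the _gitlab_backup.tar ending. As a sketch, the ID of the newest archive could be derived like this; backup_id is a hypothetical helper name, and the path is the default backup folder mentioned above.

```shell
# Hypothetical helper: strip directory and the _gitlab_backup.tar suffix.
backup_id() {
  basename "$1" _gitlab_backup.tar
}

# On the old server, for the newest archive in the default backup folder:
#   newest="$(ls -t /var/opt/gitlab/backups/*_gitlab_backup.tar | head -n 1)"
#   backup_id "$newest"
```

Noting the ID now saves looking it up again on the new server.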

Before proceeding, we will make sure that GitLab cannot start anymore. To do this, edit /etc/gitlab/gitlab.rb and add this to the very bottom:

### Migration overrides:
alertmanager['enable'] = false
gitlab_exporter['enable'] = false
gitlab_pages['enable'] = false
gitlab_workhorse['enable'] = false
grafana['enable'] = false
logrotate['enable'] = false
gitlab_rails['incoming_email_enabled'] = false
nginx['enable'] = false
node_exporter['enable'] = false
postgres_exporter['enable'] = false
postgresql['enable'] = false
prometheus['enable'] = false
puma['enable'] = false
redis['enable'] = false
redis_exporter['enable'] = false
registry['enable'] = false
sidekiq['enable'] = false

And then run gitlab-ctl reconfigure

Now you must transfer the Redis dump, the secrets file and the backup to your new server, for example using scp (run these commands on the old server one by one and adapt the hostname).

The example uses root to authenticate, which is hopefully wrong in your case. Use your username instead if applicable.

#The Redis dump goes to the place where we removed the fresh one in Step 1
sudo scp /var/opt/gitlab/redis/dump.rdb \
  root@git.example.com:/opt/dockerapps/gitlab/data/redis/dump.rdb

sudo scp /etc/gitlab/gitlab-secrets.json \
  root@git.example.com:/opt/dockerapps/gitlab/config/gitlab-secrets.json

#You might want to explicitly select the specific file to save time, space and bandwidth
sudo scp /var/opt/gitlab/backups/*.tar \
  root@git.example.com:/opt/dockerapps/gitlab/data/backups/

You can also refer to the official docs: https://docs.gitlab.com/administration/backup_restore/migrate_to_new_server/
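Before moving on, a quick check on the new server can confirm that everything arrived. This sketch assumes the Redis dump belongs where Step 1 removed the fresh one (data/redis/dump.rdb) and that backup archives end in _gitlab_backup.tar; check_migration_files is a hypothetical helper name.

```shell
# Hypothetical helper: verify that the transferred files exist below the
# compose folder. Returns non-zero if anything is missing or empty.
check_migration_files() {
  base="$1"
  missing=0
  for f in "$base/data/redis/dump.rdb" "$base/config/gitlab-secrets.json"; do
    if [ ! -s "$f" ]; then
      echo "missing or empty: $f"
      missing=1
    fi
  done
  # At least one backup archive must be present.
  set -- "$base"/data/backups/*_gitlab_backup.tar
  if [ ! -e "$1" ]; then
    echo "no backup archive in $base/data/backups"
    missing=1
  fi
  return "$missing"
}

# Usage on the new server:
#   check_migration_files /opt/dockerapps/gitlab && echo "all files in place"
```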

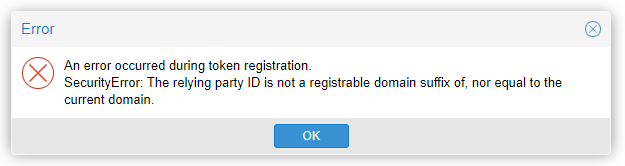

Step 4: Configuring the all-new Docker-GitLab

Now that we have backed up and shut down the old instance and transferred our backups to the new server, we can continue by configuring the new GitLab.

This is probably the most complicated part, and you're largely on your own with it. While copying the old gitlab.rb file (without the modifications above) to the new location could technically work, it's best to review the old file and reconfigure the new one cleanly. Remember: How old is your old configuration? Did you always check the upgrade guides regarding gitlab.rb? 🙂

I will only walk through the important and required configuration. Most importantly, make sure that every variable that is also set in docker-compose.yml has the same value in gitlab.rb.
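To compare the two files, it can help to list only the active (uncommented) lines of gitlab.rb, since the default file consists almost entirely of comments. A minimal sketch; active_settings is a hypothetical helper name.

```shell
# Hypothetical helper: print all lines that are neither blank nor comments.
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Usage, once gitlab.rb has been edited:
#   active_settings /opt/dockerapps/gitlab/config/gitlab.rb
```

The output can then be checked line by line against the GITLAB_OMNIBUS_CONFIG block in docker-compose.yml.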

Open the config file by running nano /opt/dockerapps/gitlab/config/gitlab.rb (or open the file with Notepad++ or any other preferred editor).

Then find each setting by searching for its variable name, remove the hash sign at the beginning of the line and adjust the value as in the following examples (and according to your further needs):

external_url 'https://git.example.com'
[...]
gitlab_rails['gitlab_shell_ssh_port'] = 9922
[...]
nginx['ssl_certificate'] = "/etc/gitlab/ssl/gitlab-ssl.pem"
nginx['ssl_certificate_key'] = "/etc/gitlab/ssl/gitlab-ssl.key"
[...]
letsencrypt['enable'] = false

If you are using the container registry, also change these:

registry_external_url 'https://registry.git.example.com'
#The following lines are crucial for registry but have to be ADDED to gitlab.rb.
#They are not included by default. Add them below
# registry_nginx['listen_port'] = 5050
registry_nginx['ssl_certificate'] = "/etc/gitlab/ssl/gitlab-ssl.pem"
registry_nginx['ssl_certificate_key'] = "/etc/gitlab/ssl/gitlab-ssl.key"

Step 5: Restoring the backup to the new GitLab instance

Now we can finally spin up the GitLab Docker container and import the data into it. Make sure that the copied files are at their desired locations (gitlab-secrets.json, dump.rdb and the backup file), then spin up the container on the target server:

cd /opt/dockerapps/gitlab
docker compose up -d

GitLab will now start up in the background. It will take a few minutes, even though the command returns immediately. You can watch the startup by running docker compose logs -f

When GitLab has started up, we can restore the backup by running the following commands one by one:

# Stop the processes that are connected to the database
docker compose exec gitlab gitlab-ctl stop puma
docker compose exec gitlab gitlab-ctl stop sidekiq

# Verify that the processes are all down before continuing - check puma and sidekiq
docker compose exec gitlab gitlab-ctl status

# Start the restore process. Check the correct filename, omitting the _gitlab_backup.tar ending!
docker compose exec gitlab gitlab-backup restore BACKUP=1751836179_2025_07_06_18.1.1-ee

GitLab will now show a warning before the restore process is started. Confirm it with "yes". There will be a few more warnings you have to confirm during the process, so keep an eye on it.

When the restore is done, stop GitLab and start it again in foreground mode. This helps to observe critical errors. It is expected that there will be warnings and a few errors during this startup. Many of these are actually normal.

docker compose down
docker compose up

After GitLab has started up successfully, check reachability on the web and give it a few minutes to settle.

Afterwards, hit Ctrl+C to shut GitLab down again. We have to remove the following two lines from docker-compose.yml:

    healthcheck:
      disable: true

Now we can finally start GitLab up and start using it. Remember to change the remotes of your projects if the SSH port has changed.

docker compose up -d

After a few more minutes, GitLab should be up and running.
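For the remotes, a non-default SSH port requires the ssh:// URL form. A small sketch of building such a URL; ssh_remote_url is a hypothetical helper, and mygroup/myproject.git is a placeholder project path.

```shell
# Hypothetical helper: build an SSH remote URL with an explicit port.
# Arguments: host, port, project path (including the .git suffix).
ssh_remote_url() {
  printf 'ssh://git@%s:%s/%s\n' "$1" "$2" "$3"
}

# Inside a local clone (mygroup/myproject.git is a placeholder):
#   git remote set-url origin "$(ssh_remote_url git.example.com 9922 mygroup/myproject.git)"
#   git remote -v
```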

As a last step, go back to the Admin Panel and re-enable all background jobs.
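Optionally, GitLab's built-in checks can help confirm that the restore worked, in particular that the restored secrets file can decrypt the stored data. A sketch, to be run from /opt/dockerapps/gitlab: verify_gitlab is a hypothetical wrapper and COMPOSE_CMD a hypothetical override for dry runs, while the two rake tasks are the ones GitLab ships.

```shell
# Hypothetical wrapper around two of GitLab's rake checks.
# COMPOSE_CMD is an assumed override (for example "echo") for dry runs.
verify_gitlab() {
  compose="${COMPOSE_CMD:-docker compose}"
  # General sanity check of the installation.
  $compose exec gitlab gitlab-rake gitlab:check SANITIZE=true &&
  # Verify that encrypted values can be decrypted with the restored secrets.
  $compose exec gitlab gitlab-rake gitlab:doctor:secrets
}

# Usage:
#   cd /opt/dockerapps/gitlab && verify_gitlab
```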

Time for coffee

…or an energy drink. That was quite some work, right? You should now have successfully migrated GitLab from a bare-metal installation to a modern Docker container.

Did everything work for you? Are you using any features that required additional configuration?

The featured image of this blog post has been generated by AI